
Programming with Python

The best way to learn how to program is to do something useful, so this introduction to Python is built around a common scientific task: data analysis.

Our real goal isn't to teach you Python, but to teach you the basic concepts that all programming depends on. We use Python in our lessons because:

- we have to use something for examples;

- it's free, well-documented, and runs almost everywhere;

- it has a large (and growing) user base among scientists; and

- experience shows that it's easier for novices to pick up than most other languages.

But the two most important things are to use whatever language your colleagues are using, so that you can share your work with them easily, and to use that language well.

We are studying inflammation in patients who have been given a new treatment for arthritis, and need to analyze the first dozen data sets of their daily inflammation. The data sets are stored in comma-separated values (CSV) format: each row holds information for a single patient, and the columns represent successive days. The first few rows of our first file look like this:

0,0,1,3,1,2,4,7,8,3,3,3,10,5,7,4,7,7,12,18,6,13,11,11,7,7,4,6,8,8,4,4,5,7,3,4,2,3,0,0

0,1,2,1,2,1,3,2,2,6,10,11,5,9,4,4,7,16,8,6,18,4,12,5,12,7,11,5,11,3,3,5,4,4,5,5,1,1,0,1

0,1,1,3,3,2,6,2,5,9,5,7,4,5,4,15,5,11,9,10,19,14,12,17,7,12,11,7,4,2,10,5,4,2,2,3,2,2,1,1

0,0,2,0,4,2,2,1,6,7,10,7,9,13,8,8,15,10,10,7,17,4,4,7,6,15,6,4,9,11,3,5,6,3,3,4,2,3,2,1

0,1,1,3,3,1,3,5,2,4,4,7,6,5,3,10,8,10,6,17,9,14,9,7,13,9,12,6,7,7,9,6,3,2,2,4,2,0,1,1

We want to:

- load that data into memory,

- calculate the average inflammation per day across all patients, and

- plot the result.

To do all that, we'll have to learn a little bit about programming.

Prerequisites

Learners need to understand the concepts of files and directories (including the working directory) and how to start a Python interpreter before tackling this lesson. This lesson references the Jupyter (IPython) Notebook, although it can be taught through any Python interpreter. The commands in this lesson pertain to Python 2.7.

Getting ready

You need to download some files to follow this lesson:

- Make a new folder on your Desktop called python-novice-inflammation.

- Download python-novice-inflammation-data.zip and move the file to this folder.

- If it's not unzipped yet, double-click on it to unzip it. You should end up with a new folder called data.

- You can access this folder from the Unix shell with:

$ cd && cd Desktop/python-novice-inflammation/data

Topics

- Analyzing Patient Data

- Repeating Actions with Loops

- Storing Multiple Values in Lists

- Analyzing Data from Multiple Files

- Making Choices

- Creating Functions

- Errors and Exceptions

- Defensive Programming

- Debugging

- Command-Line Programs


Analyzing Patient Data

Learning Objectives

- Explain what a library is, and what libraries are used for.

- Load a Python library and use the things it contains.

- Read tabular data from a file into a program.

- Assign values to variables.

- Select individual values and subsections from data.

- Perform operations on arrays of data.

- Display simple graphs.

Words are useful, but what's more useful are the sentences and stories we build with them. Similarly, while a lot of powerful tools are built into languages like Python, even more live in the libraries they are used to build.

In order to load our inflammation data, we need to import a library called NumPy. In general you should use this library if you want to do fancy things with numbers, especially if you have matrices or arrays. We can load NumPy using:

import numpy

Importing a library is like getting a piece of lab equipment out of a storage locker and setting it up on the bench. Once we've loaded the library, we can ask the library to read our data file for us:

numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

array([[ 0., 0., 1., ..., 3., 0., 0.],
       [ 0., 1., 2., ..., 1., 0., 1.],
       [ 0., 1., 1., ..., 2., 1., 1.],
       ...,
       [ 0., 1., 1., ..., 1., 1., 1.],
       [ 0., 0., 0., ..., 0., 2., 0.],
       [ 0., 0., 1., ..., 1., 1., 0.]])

The expression numpy.loadtxt(...) is a function call that asks Python to run the function loadtxt that belongs to the numpy library. This dotted notation is used everywhere in Python to refer to the parts of things as thing.component.

In this call we give numpy.loadtxt two parameters: the name of the file we want to read, and the delimiter that separates values on a line. These both need to be character strings (or strings for short), so we put them in quotes.

When we are finished typing and press Shift+Enter, the notebook runs our command. Since we haven't told it to do anything else with the function's output, the notebook displays it. In this case, that output is the data we just loaded. By default, only a few rows and columns are shown (with ... to omit elements when displaying big arrays). To save space, Python displays numbers as 1. instead of 1.0 when there's nothing interesting after the decimal point.
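To see what numpy.loadtxt returns without needing the lesson's data files, here is a small sketch that writes a made-up two-row CSV file (the name tiny.csv and its contents are assumptions for illustration) to a temporary directory and reads it back with the same two parameters. It uses print(...) with a single argument, which behaves the same way in Python 2.7 and Python 3.

```python
import os
import tempfile

import numpy

# Write a tiny, made-up CSV file (2 patients x 4 days) into a fresh
# temporary directory, so the sketch does not depend on the lesson's files.
tmpdir = tempfile.mkdtemp()
path = os.path.join(tmpdir, 'tiny.csv')
with open(path, 'w') as handle:
    handle.write('0,1,2,3\n')
    handle.write('0,2,4,6\n')

# Same call pattern as in the lesson: the file name and the delimiter.
tiny = numpy.loadtxt(fname=path, delimiter=',')
print(tiny)
print(tiny.shape)   # (rows, columns)
print(tiny.dtype)   # loadtxt produces floating-point values by default

# Tidy up the temporary file and directory.
os.remove(path)
os.rmdir(tmpdir)
```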

Our call to numpy.loadtxt read our file but didn't save the data in memory. To do that, we need to assign the array to a variable. A variable is just a name for a value, such as x, current_temperature, or subject_id. Python's variables must begin with a letter or an underscore and are case sensitive. We can create a new variable by assigning a value to it using =. As an illustration, let's step back and, instead of considering a table of data, consider the simplest "collection" of data, a single value. The line below assigns the value 55 to a variable weight_kg:

weight_kg = 55

Once a variable has a value, we can print it to the screen:

print weight_kg

55

and do arithmetic with it:

print 'weight in pounds:', 2.2 * weight_kg

weight in pounds: 121.0

We can also change a variable's value by assigning it a new one:

weight_kg = 57.5
print 'weight in kilograms is now:', weight_kg

weight in kilograms is now: 57.5

As the example above shows, we can print several things at once by separating them with commas.

If we imagine the variable as a sticky note with a name written on it, assignment is like putting the sticky note on a particular value:

Variables as Sticky Notes

This means that assigning a value to one variable does not change the values of other variables. For example, let's store the subject's weight in pounds in a variable:

weight_lb = 2.2 * weight_kg
print 'weight in kilograms:', weight_kg, 'and in pounds:', weight_lb

weight in kilograms: 57.5 and in pounds: 126.5

Creating Another Variable

and then change weight_kg:

weight_kg = 100.0
print 'weight in kilograms is now:', weight_kg, 'and weight in pounds is still:', weight_lb

weight in kilograms is now: 100.0 and weight in pounds is still: 126.5

Updating a Variable

Since weight_lb doesn't "remember" where its value came from, it isn't automatically updated when weight_kg changes. This is different from the way spreadsheets work.
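The same point can be checked in a few lines of plain Python: after re-assigning weight_kg, weight_lb keeps the value it was given, and we have to redo the conversion ourselves if we want it to follow the new weight. The extra names lb_before and fresh_lb are ours, added for illustration.

```python
weight_kg = 57.5
weight_lb = 2.2 * weight_kg    # computed once, from the value weight_kg had then
lb_before = weight_lb          # keep a note of it for comparison

weight_kg = 100.0              # re-assign weight_kg ...
print(weight_lb == lb_before)  # ... weight_lb has not changed: prints True

# To follow the new weight, the conversion has to be done again explicitly.
fresh_lb = 2.2 * weight_kg
print(fresh_lb)
```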

Just as we can assign a single value to a variable, we can also assign an array of values to a variable using the same syntax. Let's re-run numpy.loadtxt and save its result:

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

This statement doesn't produce any output because assignment doesn't display anything. If we want to check that our data has been loaded, we can print the variable's value:

print data

[[ 0. 0. 1. ..., 3. 0. 0.]
 [ 0. 1. 2. ..., 1. 0. 1.]
 [ 0. 1. 1. ..., 2. 1. 1.]
 ...,
 [ 0. 1. 1. ..., 1. 1. 1.]
 [ 0. 0. 0. ..., 0. 2. 0.]
 [ 0. 0. 1. ..., 1. 1. 0.]]

Now that our data is in memory, we can start doing things with it. First, let's ask what type of thing data refers to:

print type(data)

<type 'numpy.ndarray'>

The output tells us that data currently refers to an N-dimensional array created by the NumPy library. These data correspond to arthritis patients' inflammation: the rows are the individual patients, and the columns are their daily inflammation measurements. We can see what its shape is like this:

print data.shape

(60, 40)

This tells us that data has 60 rows and 40 columns. When we created the variable data to store our arthritis data, we didn't just create the array; we also created information about the array, called members or attributes. This extra information describes data in the same way an adjective describes a noun. data.shape is an attribute of data which describes the dimensions of data. We use the same dotted notation for the attributes of variables that we use for the functions in libraries because they have the same part-and-whole relationship.

If we want to get a single number from the array, we must provide an index in square brackets, just as we do in math:

print 'first value in data:', data[0, 0]

first value in data: 0.0

print 'middle value in data:', data[30, 20]

middle value in data: 13.0

The expression data[30, 20] may not surprise you, but data[0, 0] might. Programming languages like Fortran and MATLAB start counting at 1, because that's what human beings have done for thousands of years. Languages in the C family (including C++, Java, Perl, and Python) count from 0 because that's simpler for computers to do. As a result, if we have an M×N array in Python, its indices go from 0 to M-1 on the first axis and 0 to N-1 on the second. It takes a bit of getting used to, but one way to remember the rule is that the index is how many steps we have to take from the start to get the item we want.
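A small, made-up 3×4 array (the name grid is ours) makes the counting rule easy to check: shape gives M and N, the first element is at [0, 0], the last at [M-1, N-1], and index M on the first axis is already one step past the end.

```python
import numpy

# A made-up 3 x 4 array: 3 rows (axis 0) and 4 columns (axis 1).
grid = numpy.array([[0, 1, 2, 3],
                    [10, 11, 12, 13],
                    [20, 21, 22, 23]])

rows, cols = grid.shape           # shape is an attribute, so no parentheses
first = grid[0, 0]                # zero steps from the start on both axes
last = grid[rows - 1, cols - 1]   # the last valid indices are M-1 and N-1
print(first)                      # 0
print(last)                       # 23

# Index M on the first axis is one step past the end.
try:
    grid[rows, 0]
    out_of_range = False
except IndexError:
    out_of_range = True
print(out_of_range)               # True
```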

An index like [30, 20] selects a single element of an array, but we can select whole sections as well. For example, we can select the first ten days (columns) of values for the first four patients (rows) like this:

print data[0:4, 0:10]

[[ 0. 0. 1. 3. 1. 2. 4. 7. 8. 3.]
 [ 0. 1. 2. 1. 2. 1. 3. 2. 2. 6.]
 [ 0. 1. 1. 3. 3. 2. 6. 2. 5. 9.]
 [ 0. 0. 2. 0. 4. 2. 2. 1. 6. 7.]]

The slice 0:4 means, "Start at index 0 and go up to, but not including, index 4." Again, the up-to-but-not-including takes a bit of getting used to, but the rule is that the difference between the upper and lower bounds is the number of values in the slice.

We don't have to start slices at 0:

print data[5:10, 0:10]

[[ 0. 0. 1. 2. 2. 4. 2. 1. 6. 4.]
 [ 0. 0. 2. 2. 4. 2. 2. 5. 5. 8.]
 [ 0. 0. 1. 2. 3. 1. 2. 3. 5. 3.]
 [ 0. 0. 0. 3. 1. 5. 6. 5. 5. 8.]
 [ 0. 1. 1. 2. 1. 3. 5. 3. 5. 8.]]

We also don't have to include the upper and lower bound on the slice. If we don't include the lower bound, Python uses 0 by default; if we don't include the upper, the slice runs to the end of the axis; and if we don't include either (i.e., if we just use ':' on its own), the slice includes everything:

small = data[:3, 36:]
print 'small is:'
print small

small is:
[[ 2. 3. 0. 0.]
 [ 1. 1. 0. 1.]
 [ 2. 2. 1. 1.]]

Arrays also know how to perform common mathematical operations on their values. The simplest operations with data are arithmetic: add, subtract, multiply, and divide. When you do such operations on arrays, the operation is done on each individual element of the array. Thus:

doubledata = data * 2.0

will create a new array doubledata whose elements have the value of two times the value of the corresponding elements in data:

print 'original:'
print data[:3, 36:]
print 'doubledata:'
print doubledata[:3, 36:]

original:
[[ 2. 3. 0. 0.]
 [ 1. 1. 0. 1.]
 [ 2. 2. 1. 1.]]
doubledata:
[[ 4. 6. 0. 0.]
 [ 2. 2. 0. 2.]
 [ 4. 4. 2. 2.]]

If, instead of taking an array and doing arithmetic with a single value (as above), you do the arithmetic operation with another array of the same shape, the operation will be done on corresponding elements of the two arrays. Thus:

tripledata = doubledata + data

will give you an array where tripledata[0,0] will equal doubledata[0,0] plus data[0,0], and so on for all other elements of the arrays.

print 'tripledata:'
print tripledata[:3, 36:]

tripledata:
[[ 6. 9. 0. 0.]
 [ 3. 3. 0. 3.]
 [ 6. 6. 3. 3.]]

Often, we want to do more than add, subtract, multiply, and divide values of data. Arrays also know how to do more complex operations on their values. If we want to find the average inflammation for all patients on all days, for example, we can just ask the array for its mean value:

print data.mean()

6.14875

mean is a method of the array, i.e., a function that belongs to it in the same way that the member shape does. If variables are nouns, methods are verbs: they are what the thing in question knows how to do. We need empty parentheses for data.mean(), even when we're not passing in any parameters, to tell Python to go and do something for us. data.shape doesn't need () because it is just a description, but data.mean() requires the () because it is an action.
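Element-wise arithmetic and the attribute/method distinction can both be seen on a tiny, made-up 2×2 array (the names values, doubled, and tripled are ours). Note that the arithmetic builds new arrays and leaves values itself unchanged.

```python
import numpy

values = numpy.array([[1.0, 2.0],
                      [3.0, 4.0]])   # a made-up 2 x 2 array

doubled = values * 2.0       # one number: applied to every element
tripled = doubled + values   # two arrays of the same shape: element by element
print(tripled)

print(values.shape)          # attribute: a description, no parentheses
print(tripled.mean())        # method: an action, so it needs parentheses
```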

NumPy arrays have lots of useful methods:

print 'maximum inflammation:', data.max()
print 'minimum inflammation:', data.min()
print 'standard deviation:', data.std()

maximum inflammation: 20.0
minimum inflammation: 0.0
standard deviation: 4.61383319712

When analyzing data, though, we often want to look at partial statistics, such as the maximum value per patient or the average value per day. One way to do this is to create a new temporary array of the data we want, then ask it to do the calculation:

patient_0 = data[0, :] # 0 on the first axis, everything on the second
print 'maximum inflammation for patient 0:', patient_0.max()

maximum inflammation for patient 0: 18.0

We don't actually need to store the row in a variable of its own. Instead, we can combine the selection and the method call:

print 'maximum inflammation for patient 2:', data[2, :].max()

maximum inflammation for patient 2: 19.0

What if we need the maximum inflammation for each patient (as in the next diagram on the left), or the average for each day (as in the diagram on the right)? As the diagram below shows, we want to perform the operation across an axis:

Operations Across Axes

To support this, most array methods allow us to specify the axis we want to work on. If we ask for the average across axis 0 (rows in our 2D example), we get:

print data.mean(axis=0)

[ 0. 0.45 1.11666667 1.75 2.43333333 3.15
 3.8 3.88333333 5.23333333 5.51666667 5.95 5.9
 8.35 7.73333333 8.36666667 9.5 9.58333333
 10.63333333 11.56666667 12.35 13.25 11.96666667
 11.03333333 10.16666667 10. 8.66666667 9.15 7.25
 7.33333333 6.58333333 6.06666667 5.95 5.11666667 3.6
 3.3 3.56666667 2.48333333 1.5 1.13333333
 0.56666667]

As a quick check, we can ask this array what its shape is:

print data.mean(axis=0).shape

(40,)

The expression (40,) tells us we have a one-dimensional array of 40 values, one per day, so this is the average inflammation per day for all patients. If we average across axis 1 (columns in our 2D example), we get:

print data.mean(axis=1)

[ 5.45 5.425 6.1 5.9 5.55 6.225 5.975 6.65 6.625 6.525
 6.775 5.8 6.225 5.75 5.225 6.3 6.55 5.7 5.85 6.55
 5.775 5.825 6.175 6.1 5.8 6.425 6.05 6.025 6.175 6.55
 6.175 6.35 6.725 6.125 7.075 5.725 5.925 6.15 6.075 5.75
 5.975 5.725 6.3 5.9 6.75 5.925 7.225 6.15 5.95 6.275 5.7
 6.1 6.825 5.975 6.725 5.7 6.25 6.4 7.05 5.9 ]

which is the average inflammation per patient across all days.

The mathematician Richard Hamming once said, "The purpose of computing is insight, not numbers," and the best way to develop insight is often to visualize data. Visualization deserves an entire lecture (or course) of its own, but we can explore a few features of Python's matplotlib library here. While there is no "official" plotting library, this package is the de facto standard. First, we will import the pyplot module from matplotlib and use two of its functions to create and display a heat map of our data:

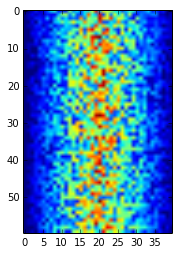

import matplotlib.pyplot

image = matplotlib.pyplot.imshow(data)

matplotlib.pyplot.show()

Heatmap of the Data

Blue regions in this heat map are low values, while red shows high values. As we can see, inflammation rises and falls over a 40-day period.

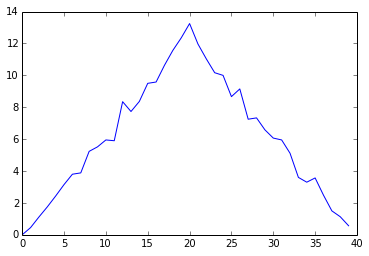

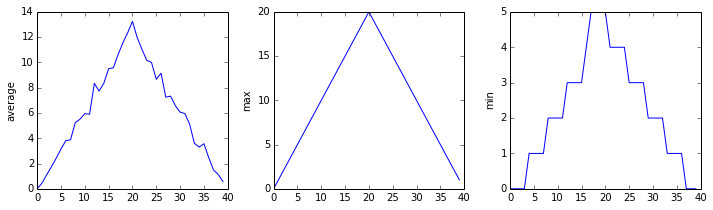

Let's take a look at the average inflammation over time:

ave_inflammation = data.mean(axis=0)

ave_plot = matplotlib.pyplot.plot(ave_inflammation)

matplotlib.pyplot.show()

Average Inflammation Over Time

Here, we have put the average per day across all patients in the variable ave_inflammation, then asked matplotlib.pyplot to create and display a line graph of those values. The result is roughly a linear rise and fall, which is suspicious: based on other studies, we expect a sharper rise and slower fall. Let's have a look at two other statistics:

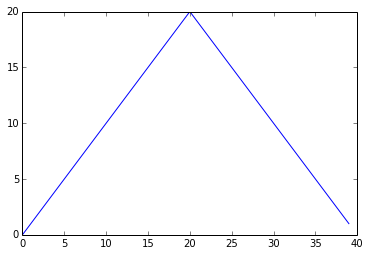

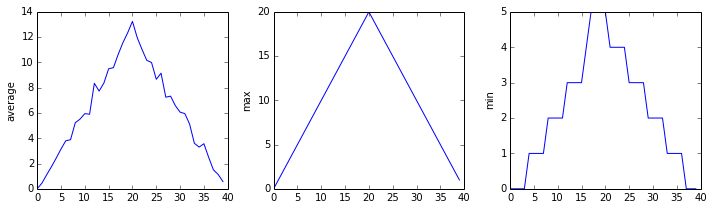

max_plot = matplotlib.pyplot.plot(data.max(axis=0))

matplotlib.pyplot.show()

Maximum Value Along The First Axis

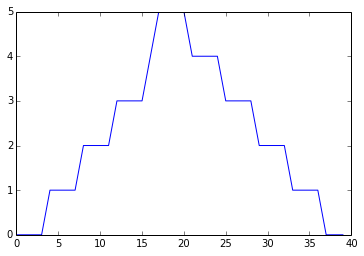

min_plot = matplotlib.pyplot.plot(data.min(axis=0))

matplotlib.pyplot.show()

Minimum Value Along The First Axis

The maximum value rises and falls perfectly smoothly, while the minimum seems to be a step function. Neither result seems particularly likely, so either there's a mistake in our calculations or something is wrong with our data.

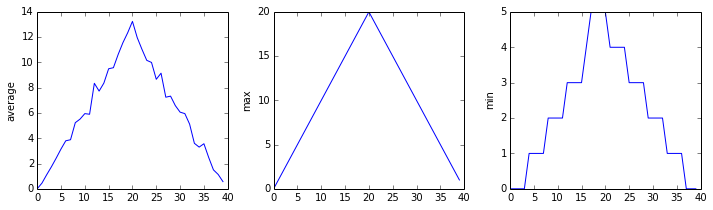

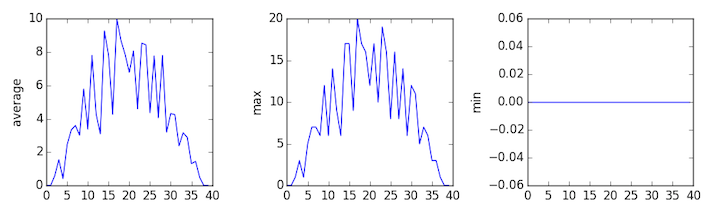

You can group similar plots in a single figure using subplots. The script below uses a number of new commands. The function matplotlib.pyplot.figure() creates a space into which we will place all of our plots. The parameter figsize tells Python how big to make this space (width and height, in inches). Each subplot is placed into the figure using the figure's add_subplot method, which takes 3 parameters: the first denotes how many total rows of subplots there are, the second refers to the total number of subplot columns, and the third denotes which subplot your variable is referencing. Each subplot is stored in a different variable (axes1, axes2, axes3). Once a subplot is created, its axes can be labelled using the set_xlabel() method (or set_ylabel()). Here are our three plots side by side:

import numpy

import matplotlib.pyplot

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

fig = matplotlib.pyplot.figure(figsize=(10.0, 3.0))

axes1 = fig.add_subplot(1, 3, 1)

axes2 = fig.add_subplot(1, 3, 2)

axes3 = fig.add_subplot(1, 3, 3)

axes1.set_ylabel('average')

axes1.plot(data.mean(axis=0))

axes2.set_ylabel('max')

axes2.plot(data.max(axis=0))

axes3.set_ylabel('min')

axes3.plot(data.min(axis=0))

fig.tight_layout()

matplotlib.pyplot.show()

The Previous Plots as Subplots

The call to loadtxt reads our data, and the rest of the program tells the plotting library how large we want the figure to be, that we're creating three sub-plots, what to draw for each one, and that we want a tight layout. (Perversely, if we leave out that call to fig.tight_layout(), the graphs will actually be squeezed together more closely.)

Check your understanding

Draw diagrams showing what variables refer to what values after each statement in the following program:

mass = 47.5

age = 122

mass = mass * 2.0

age = age - 20

Sorting out references

What does the following program print out?

first, second = 'Grace', 'Hopper'

third, fourth = second, first

print third, fourth

Slicing strings

A section of an array is called a slice. We can take slices of character strings as well:

element = 'oxygen'
print 'first three characters:', element[0:3]
print 'last three characters:', element[3:6]

first three characters: oxy
last three characters: gen

What is the value of element[:4]? What about element[4:]? Or element[:]?

What is element[-1]? What is element[-2]? Given those answers, explain what element[1:-1] does.

Thin slices

The expression element[3:3] produces an empty string, i.e., a string that contains no characters. If data holds our array of patient data, what does data[3:3, 4:4] produce? What about data[3:3, :]?

Check your understanding: plot scaling

Why do all of our plots stop just short of the upper end of our graph?

Check your understanding: drawing straight lines

Why are the vertical lines in our plot of the minimum inflammation per day not perfectly vertical?

Make your own plot

Create a plot showing the standard deviation (numpy.std) of the inflammation data for each day across all patients.

Moving plots around

Modify the program to display the three plots on top of one another instead of side by side.

Repeating Actions with Loops

Learning Objectives

- Explain what a for loop does.

- Correctly write for loops to repeat simple calculations.

- Trace changes to a loop variable as the loop runs.

- Trace changes to other variables as they are updated by a for loop.

In the last lesson, we wrote some code that plots some values of interest from our first inflammation dataset (inflammation-01.csv) and reveals some suspicious features in it, but we have a dozen data sets right now and more on the way. We want to create plots for all our data sets with a single statement. To do that, we'll have to teach the computer how to repeat things.

An example task that we might want to repeat is printing each character in a word on a line of its own. One way to do this would be to use a series of print statements:

word = 'lead'
print word[0]
print word[1]
print word[2]
print word[3]

l
e
a
d

but that's a bad approach for two reasons:

It doesn't scale: if we want to print the characters in a string that's hundreds of letters long, we'd be better off just typing them in.

It's fragile: if we give it a longer string, it only prints part of the data, and if we give it a shorter one, it produces an error because we're asking for characters that don't exist.

word = 'tin'
print word[0]
print word[1]
print word[2]
print word[3]

t
i
n
---------------------------------------------------------------------------
IndexError                                Traceback (most recent call last)
<ipython-input-3-7974b6cdaf14> in <module>()
      3 print word[1]
      4 print word[2]
----> 5 print word[3]

IndexError: string index out of range

Here's a better approach:

word = 'lead'
for char in word:
    print char

l
e
a
d

This is shorter (certainly shorter than something that prints every character in a hundred-letter string) and more robust as well:

word = 'oxygen'
for char in word:
    print char

o
x
y
g
e
n

The improved version uses a for loop to repeat an operation (in this case, printing) once for each thing in a collection. The general form of a loop is:

for variable in collection:
    do things with variable

We can call the loop variable anything we like, but there must be a colon at the end of the line starting the loop, and we must indent anything we want to run inside the loop. Unlike many other languages, there is no command to end a loop (e.g. end for); what is indented after the for statement belongs to the loop.

Here's another loop that repeatedly updates a variable:

length = 0
for vowel in 'aeiou':
    length = length + 1
print 'There are', length, 'vowels'

There are 5 vowels

It's worth tracing the execution of this little program step by step. Since there are five characters in 'aeiou', the statement on line 3 will be executed five times. The first time around, length is zero (the value assigned to it on line 1) and vowel is 'a'. The statement adds 1 to the old value of length, producing 1, and updates length to refer to that new value. The next time around, vowel is 'e' and length is 1, so length is updated to be 2. After three more updates, length is 5; since there is nothing left in 'aeiou' for Python to process, the loop finishes and the print statement on line 4 tells us our final answer.

Note that a loop variable is just a variable that's being used to record progress in a loop. It still exists after the loop is over, and we can re-use variables previously defined as loop variables as well:

letter = 'z'
for letter in 'abc':
    print letter
print 'after the loop, letter is', letter

a
b
c
after the loop, letter is c

Note also that finding the length of a string is such a common operation that Python actually has a built-in function to do it called len:

print len('aeiou')

5

len is much faster than any function we could write ourselves, and much easier to read than a two-line loop; it will also give us the length of many other things that we haven't met yet, so we should always use it when we can.

From 1 to N

Python has a built-in function called range that creates a list of numbers. range can accept 1 to 3 parameters. If one parameter is given, range creates a list of that length, starting at zero and incrementing by 1. If 2 parameters are given, range starts at the first and stops just before the second, incrementing by 1. If range is given 3 parameters, it starts at the first one, stops before the second one, and increments by the third one. For example, range(3) produces [0, 1, 2] and range(2, 5) produces [2, 3, 4]. Using range, write a loop that prints the first 3 natural numbers:

1
2
3

Computing powers with loops

Exponentiation is built into Python:

print 5 ** 3

125

Write a loop that calculates the same result as 5 ** 3 using multiplication (and without exponentiation).

Reverse a string

Write a loop that takes a string, and produces a new string with the characters in reverse order, so 'Newton' becomes 'notweN'.

Storing Multiple Values in Lists

Learning Objectives

- Explain what a list is.

- Create and index lists of simple values.

Just as a for loop is a way to do operations many times, a list is a way to store many values. Unlike NumPy arrays, lists are built into the language (so we don't have to load a library to use them). We create a list by putting values inside square brackets:

odds = [1, 3, 5, 7]
print 'odds are:', odds

odds are: [1, 3, 5, 7]

We select individual elements from lists by indexing them:

print 'first and last:', odds[0], odds[-1]

first and last: 1 7

and if we loop over a list, the loop variable is assigned elements one at a time:

for number in odds:
    print number

1
3
5
7

There is one important difference between lists and strings: we can change the values in a list, but we cannot change the characters in a string. For example:

names = ['Newton', 'Darwing', 'Turing'] # typo in Darwin's name
print 'names is originally:', names
names[1] = 'Darwin' # correct the name
print 'final value of names:', names

names is originally: ['Newton', 'Darwing', 'Turing']
final value of names: ['Newton', 'Darwin', 'Turing']

works, but:

name = 'Bell'
name[0] = 'b'

---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
<ipython-input-8-220df48aeb2e> in <module>()
      1 name = 'Bell'
----> 2 name[0] = 'b'

TypeError: 'str' object does not support item assignment

does not.

There are many ways to change the contents of lists besides assigning new values to individual elements:

odds.append(11)
print 'odds after adding a value:', odds

odds after adding a value: [1, 3, 5, 7, 11]

del odds[0]
print 'odds after removing the first element:', odds

odds after removing the first element: [3, 5, 7, 11]

odds.reverse()
print 'odds after reversing:', odds

odds after reversing: [11, 7, 5, 3]

While modifying in place, it is useful to remember that Python treats lists in a slightly counterintuitive way.

If we make a list, (attempt to) copy it, and then modify the copy in place, we can cause all sorts of trouble:

odds = [1, 3, 5, 7]
primes = odds
primes += [2]
print 'primes:', primes
print 'odds:', odds

primes: [1, 3, 5, 7, 2]
odds: [1, 3, 5, 7, 2]

This is because Python stores a list in memory, and then can use multiple names to refer to the same list. If all we want to do is copy a (simple) list, we can use the list() function, so we do not modify a list we did not mean to:

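odds = [1, 3, 5, 7]
primes = list(odds)
primes += [2]
print 'primes:', primes
print 'odds:', odds

primes: [1, 3, 5, 7, 2]
odds: [1, 3, 5, 7]

Sharing one list between two names, as in the first example, is different from how variables worked in lesson 1, and more similar to how a spreadsheet works: a change made through one name is visible through the other.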

Turn a string into a list

Use a for-loop to convert the string "hello" into a list of letters:

["h", "e", "l", "l", "o"]

Hint: You can create an empty list like this:

my_list = []

Analyzing Data from Multiple Files

Learning Objectives

- Use a library function to get a list of filenames that match a simple wildcard pattern.

- Use a for loop to process multiple files.

We now have almost everything we need to process all our data files. The only thing that's missing is a library with a rather unpleasant name:

import glob

The glob library contains a function, also called glob, that finds files whose names match a pattern. We provide those patterns as strings: the character * matches zero or more characters, while ? matches any one character. We can use this to get the names of all the html files:

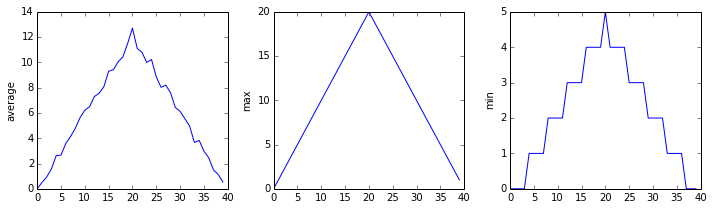

print glob.glob('*.html')

['01-numpy.html', '02-loop.html', '03-lists.html', '04-files.html', '05-cond.html', '06-func.html', '07-errors.html', '08-defensive.html', '09-debugging.html', '10-cmdline.html', 'index.html', 'LICENSE.html', 'instructors.html', 'README.html', 'discussion.html', 'reference.html']

As this example shows, glob.glob's result is a list of strings, which means we can loop over it to do something with each filename in turn. In our case, the "something" we want to do is generate a set of plots for each file in our inflammation dataset. Let's test it by analyzing the first three files in the list:

import glob
import numpy
import matplotlib.pyplot

filenames = glob.glob('*.csv')
filenames = filenames[0:3]
for f in filenames:
    print f

    data = numpy.loadtxt(fname=f, delimiter=',')

    fig = matplotlib.pyplot.figure(figsize=(10.0, 3.0))

    axes1 = fig.add_subplot(1, 3, 1)
    axes2 = fig.add_subplot(1, 3, 2)
    axes3 = fig.add_subplot(1, 3, 3)

    axes1.set_ylabel('average')
    axes1.plot(data.mean(axis=0))

    axes2.set_ylabel('max')
    axes2.plot(data.max(axis=0))

    axes3.set_ylabel('min')
    axes3.plot(data.min(axis=0))

    fig.tight_layout()
    matplotlib.pyplot.show()

inflammation-01.csv
inflammation-02.csv
inflammation-03.csv

Sure enough, the maxima of the first two data sets show exactly the same ramp, and their minima show the same staircase structure. The third dataset reveals a different situation: its maxima are a bit less regular, but its minima are consistently zero.
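glob.glob does not guarantee any particular order for its results, so sorting them is a sensible habit when, as in the loop above, we pick "the first three" files. This sketch creates a few empty, made-up files in a temporary folder (all the file names are assumptions for illustration) and shows both wildcards, * and ?, with the results sorted.

```python
import glob
import os
import tempfile

# Make a temporary folder containing a few empty, made-up files.
folder = tempfile.mkdtemp()
for name in ['inflammation-02.csv', 'inflammation-01.csv', 'small-01.csv', 'notes.txt']:
    open(os.path.join(folder, name), 'w').close()

# '*' matches any run of characters; '?' matches exactly one character.
all_csv = sorted(glob.glob(os.path.join(folder, '*.csv')))
inflammation = sorted(glob.glob(os.path.join(folder, 'inflammation-0?.csv')))

# glob returns paths; keep just the file names for display.
names = []
for path in inflammation:
    names.append(os.path.basename(path))
print(len(all_csv))   # 3
print(names)          # ['inflammation-01.csv', 'inflammation-02.csv']
```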

Making Choices

Learning Objectives

- Explain the similarities and differences between tuples and lists.

- Write conditional statements including if, elif, and else branches.

- Correctly evaluate expressions containing and and or.

In our last lesson, we discovered something suspicious was going on in our inflammation data by drawing some plots. How can we use Python to automatically recognize the different features we saw, and take a different action for each? In this lesson, we'll learn how to write code that runs only when certain conditions are true.

Conditionals

We can ask Python to take different actions, depending on a condition, with an if statement:

num = 37
if num > 100:
    print 'greater'
else:
    print 'not greater'
print 'done'

not greater
done

The second line of this code uses the keyword if to tell Python that we want to make a choice. If the test that follows the if statement is true, the body of the if (i.e., the lines indented underneath it) is executed. If the test is false, the body of the else is executed instead. Only one or the other is ever executed.

Conditional statements don't have to include an else. If there isn't one, Python simply does nothing if the test is false:

num = 53
print 'before conditional...'
if num > 100:
    print '53 is greater than 100'
print '...after conditional'

before conditional...
...after conditional

We can also chain several tests together using elif, which is short for "else if". The following Python code uses elif to print the sign of a number.

num = -3
if num > 0:
    print num, "is positive"
elif num == 0:
    print num, "is zero"
else:
    print num, "is negative"

-3 is negative

One important thing to notice in the code above is that we use a double equals sign == to test for equality rather than a single equals sign, because the latter is used to mean assignment.

We can also combine tests using and and or. An and expression is only true if both parts are true:

if (1 > 0) and (-1 > 0):
    print 'both parts are true'
else:
    print 'one part is not true'

one part is not true

while an or expression is true if at least one part is true:

if (1 < 0) or (-1 < 0):
    print 'at least one test is true'

at least one test is true

Checking our Data

Now that we've seen how conditionals work, we can use them to check for the suspicious features we saw in our inflammation data. In the first couple of plots, the maximum inflammation per day seemed to rise like a straight line, one unit per day. We can check for this inside the for loop we wrote with the following conditional:

if data.max(axis=0)[0] == 0 and data.max(axis=0)[20] == 20:
    print 'Suspicious looking maxima!'

We also saw a different problem in the third dataset: the minima per day were all zero (it looks like a healthy person snuck into our study). We can also check for this with an elif condition:

elif data.min(axis=0).sum() == 0:
    print 'Minima add up to zero!'

And if neither of these conditions is true, we can use else to give the all-clear:

else:
    print 'Seems OK!'

Let's test that out:

import numpy

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')
if data.max(axis=0)[0] == 0 and data.max(axis=0)[20] == 20:
    print 'Suspicious looking maxima!'
elif data.min(axis=0).sum() == 0:
    print 'Minima add up to zero!'
else:
    print 'Seems OK!'

Suspicious looking maxima!

data = numpy.loadtxt(fname='inflammation-03.csv', delimiter=',')
if data.max(axis=0)[0] == 0 and data.max(axis=0)[20] == 20:
    print 'Suspicious looking maxima!'
elif data.min(axis=0).sum() == 0:
    print 'Minima add up to zero!'
else:
    print 'Seems OK!'

Minima add up to zero!

In this way, we have asked Python to do something different depending on the condition of our data. Here we printed messages in all cases, but we could also leave out the else catch-all so that messages are only printed when something is wrong, freeing us from having to manually examine every plot for features we've seen before.
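The checks above can also be tried without the CSV files. The sketch below is a variant built on stated assumptions: check_data is a hypothetical helper that runs the same three tests on an array already in memory and returns the message instead of printing it, and the three arrays are made-up stand-ins shaped like the inflammation data (rows are patients, columns are days), not real measurements.

```python
import numpy

def check_data(data):
    '''Hypothetical helper: apply the lesson's three tests to a 2D array
    and return the message that would have been printed.'''
    if data.max(axis=0)[0] == 0 and data.max(axis=0)[20] == 20:
        return 'Suspicious looking maxima!'
    elif data.min(axis=0).sum() == 0:
        return 'Minima add up to zero!'
    else:
        return 'Seems OK!'

# Made-up arrays: 3 "patients" by 40 "days" each
ramp = numpy.tile(numpy.arange(40.0), (3, 1))  # every row is 0, 1, 2, ..., 39
zero_minima = numpy.ones((3, 40))
zero_minima[0, :] = 0                           # one row of zeros makes every daily minimum 0
flat = numpy.ones((3, 40))                      # nothing suspicious

print(check_data(ramp))         # Suspicious looking maxima!
print(check_data(zero_minima))  # Minima add up to zero!
print(check_data(flat))         # Seems OK!
```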

How many paths?

Which of the following would be printed if you were to run this code? Why did you pick this answer?

- A

- B

- C

- B and C

if 4 > 5:
    print 'A'
elif 4 == 5:
    print 'B'
elif 4 < 5:
    print 'C'

What is truth?

True and False are special words in Python called booleans, which represent true and false values. However, they aren't the only values in Python that can be treated as true or false. In fact, any value can be used in an if or elif. After reading and running the code below, explain the rule for which values are considered true and which are considered false. (Note that if the body of a conditional is a single statement, we can write it on the same line as the if.)

if '': print 'empty string is true'

if 'word': print 'word is true'

if []: print 'empty list is true'

if [1, 2, 3]: print 'non-empty list is true'

if 0: print 'zero is true'

if 1: print 'one is true'

Close enough

Write some conditions that print True if the variable a is within 10% of the variable b and False otherwise. Compare your implementation with your partner's: do you get the same answer for all possible pairs of numbers?

In-place operators

Python, like most languages in the C family, provides in-place operators that work like this:

x = 1  # original value
x += 1 # add one to x, assigning result back to x
x *= 3 # multiply x by 3
print x

6

Write some code that sums the positive and negative numbers in a list separately, using in-place operators. Do you think the result is more or less readable than writing the same without in-place operators?
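In-place versions exist for the other arithmetic operators too. The short sketch below (with made-up values) chains a few of them; // is used instead of / so that the result is the same in Python 2.7 and Python 3.

```python
count = 10
count -= 4    # subtract 4: count is now 6
count *= 2    # multiply by 2: count is now 12
count //= 5   # floor-divide by 5: count is now 2
count **= 3   # raise to the 3rd power: count is now 8
print(count)  # prints 8

word = 'in'
word += 'place'  # += on strings concatenates them
print(word)      # prints inplace
```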

Tuples and exchanges

Explain what the overall effect of this code is:

left = 'L'
right = 'R'

temp = left
left = right
right = temp

Compare it to:

left, right = right, left

Do they always do the same thing? Which do you find easier to read?

Creating Functions

Learning Objectives

- Define a function that takes parameters.

- Return a value from a function.

- Test and debug a function.

- Set default values for function parameters.

- Explain why we should divide programs into small, single-purpose functions.

At this point, we've written code to draw some interesting features in our inflammation data, loop over all our data files to quickly draw these plots for each of them, and have Python make decisions based on what it sees in our data. But our code is getting pretty long and complicated; what if we had thousands of datasets, and didn't want to generate a figure for every single one? Commenting out the figure-drawing code is a nuisance. Also, what if we want to use that code again, on a different dataset or at a different point in our program? Cutting and pasting it is going to make our code get very long and very repetitive, very quickly. We'd like a way to package our code so that it is easier to reuse, and Python provides for this by letting us define things called 'functions': a shorthand way of re-executing longer pieces of code.

Let's start by defining a function fahr_to_kelvin that converts temperatures from Fahrenheit to Kelvin:

def fahr_to_kelvin(temp):
    return ((temp - 32) * (5/9)) + 273.15

The function definition opens with the word def, which is followed by the name of the function and a parenthesized list of parameter names. The body of the function (the statements that are executed when it runs) is indented below the definition line, typically by four spaces.

When we call the function, the values we pass to it are assigned to those parameters so that we can use them inside the function. Inside the function, we use a return statement to send a result back to whoever asked for it.
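One consequence of return being how a function hands back its result: a function that never reaches a return statement gives back the special value None. The two function names below are hypothetical, chosen just to contrast the two cases.

```python
def double_and_return(value):
    return value * 2        # sends the result back to the caller

def double_and_forget(value):
    result = value * 2      # computed, but never returned

print(double_and_return(21))  # prints 42
print(double_and_forget(21))  # prints None: no return statement was reached
```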

Let's try running our function. Calling our own function is no different from calling any other function:

print 'freezing point of water:', fahr_to_kelvin(32)
print 'boiling point of water:', fahr_to_kelvin(212)

freezing point of water: 273.15
boiling point of water: 273.15

We've successfully called the function that we defined, and we have access to the value that we returned. Unfortunately, the value returned doesn't look right. What went wrong?

Debugging a Function

Debugging is the process of finding and fixing the cause of a problem in code that we know is working incorrectly. In this case, we know that fahr_to_kelvin is giving us the wrong answer, so let's find out why.

For big pieces of code, there are tools called debuggers that aid in this process. Since we just have a short function, we'll debug by choosing some parameter value, breaking our function into small parts, and printing out the value of each part.

# We'll use temp = 212, the boiling point of water, which was incorrect
print "212 - 32:", 212 - 32

212 - 32: 180

print "(212 - 32) * (5/9):", (212 - 32) * (5/9)

(212 - 32) * (5/9): 0

Aha! The problem comes when we multiply by 5/9. This is because 5/9 is actually 0.

5/9

0

Computers store numbers in one of two ways: as integers or as floating-point numbers (or floats). The first are the numbers we usually count with; the second have fractional parts. Addition, subtraction and multiplication work on both as we'd expect, but division works differently. If we divide one integer by another, we get the quotient without the remainder:

print '10/3 is:', 10/3

10/3 is: 3

If either part of the division is a float, on the other hand, the computer creates a floating-point answer:

print '10.0/3 is:', 10.0/3

10.0/3 is: 3.33333333333

The computer does this for historical reasons: integer operations were much faster on early machines, and this behavior is actually useful in a lot of situations. It's still confusing, though, so in Python 3 the / operator always produces a floating-point answer (and // performs integer division). We're still using Python 2.7 in this class, though, so if we want 5/9 to give us the right answer, we have to write it as 5.0/9, 5/9.0, or some other variation.
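If we do want the integer quotient, we can ask for it explicitly. The sketch below uses the // (floor division) and % (remainder) operators, which behave the same in Python 2.7 and Python 3, alongside a float division for comparison; the variable names are illustrative only.

```python
quotient = 10 // 3   # floor division: 3
remainder = 10 % 3   # the remainder that floor division discards: 1
ratio = 10.0 / 3     # one float operand, so the result is a float (about 3.333)
factor = 5.0 / 9     # the factor fahr_to_kelvin needs (about 0.5556)

print(quotient)
print(remainder)
print(ratio)
print(factor)

# quotient * 3 + remainder rebuilds the original integer
print(quotient * 3 + remainder == 10)  # prints True
```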

Another way to create a floating-point answer is to explicitly tell the computer that you desire one. This is achieved by casting one of the numbers:

print 'float(10)/3 is:', float(10)/3

float(10)/3 is: 3.33333333333

The advantage of this method is that it can be used with existing variables. Let's take a look:

a = 10
b = 3
print 'a/b is:', a/b
print 'float(a)/b is:', float(a)/b

a/b is: 3
float(a)/b is: 3.33333333333

Let's fix our fahr_to_kelvin function with this new knowledge:

def fahr_to_kelvin(temp):
    return ((temp - 32) * (5.0/9.0)) + 273.15

print 'freezing point of water:', fahr_to_kelvin(32)
print 'boiling point of water:', fahr_to_kelvin(212)

freezing point of water: 273.15
boiling point of water: 373.15

Composing Functions

Now that we've seen how to turn Fahrenheit into Kelvin, it's easy to turn Kelvin into Celsius:

def kelvin_to_celsius(temp):
    return temp - 273.15

print 'absolute zero in Celsius:', kelvin_to_celsius(0.0)

absolute zero in Celsius: -273.15

What about converting Fahrenheit to Celsius? We could write out the formula, but we don't need to. Instead, we can compose the two functions we have already created:

def fahr_to_celsius(temp):
    temp_k = fahr_to_kelvin(temp)
    result = kelvin_to_celsius(temp_k)
    return result

print 'freezing point of water in Celsius:', fahr_to_celsius(32.0)

freezing point of water in Celsius: 0.0

This is our first taste of how larger programs are built: we define basic operations, then combine them in ever-larger chunks to get the effect we want. Real-life functions will usually be larger than the ones shown here (typically half a dozen to a few dozen lines), but they shouldn't ever be much longer than that, or the next person who reads them won't be able to understand what's going on.
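One way to gain confidence in composed functions is to check them against temperatures we already know. The sketch below gathers the three functions from this section (writing fahr_to_celsius as a single nested call, which should be equivalent to the three-line version) and uses assert to stop with an error if any result is off by more than 1e-9, a tolerance chosen arbitrarily to absorb floating-point rounding. The known pairs include -40, where the Fahrenheit and Celsius scales agree.

```python
def fahr_to_kelvin(temp):
    return ((temp - 32) * (5.0 / 9.0)) + 273.15

def kelvin_to_celsius(temp):
    return temp - 273.15

def fahr_to_celsius(temp):
    # Same composition as above, written as one nested call
    return kelvin_to_celsius(fahr_to_kelvin(temp))

# Compare against known values, allowing for tiny floating-point errors
for fahr, expected_celsius in [(32.0, 0.0), (212.0, 100.0), (-40.0, -40.0)]:
    result = fahr_to_celsius(fahr)
    print('%s F -> %s C' % (fahr, result))
    assert abs(result - expected_celsius) < 1e-9
```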

Tidying up

Now that we know how to wrap bits of code up in functions, we can make our inflammation analysis easier to read and easier to reuse. First, let's make an analyze function that generates our plots:

import numpy as np
import matplotlib.pyplot as plt

def analyze(filename):
    data = np.loadtxt(fname=filename, delimiter=',')

    fig = plt.figure(figsize=(10.0, 3.0))

    axes1 = fig.add_subplot(1, 3, 1)
    axes2 = fig.add_subplot(1, 3, 2)
    axes3 = fig.add_subplot(1, 3, 3)

    axes1.set_ylabel('average')
    axes1.plot(data.mean(axis=0))

    axes2.set_ylabel('max')
    axes2.plot(data.max(axis=0))

    axes3.set_ylabel('min')
    axes3.plot(data.min(axis=0))

    fig.tight_layout()
    plt.show()

and another function called detect_problems that checks for the suspicious features we noticed:

def detect_problems(filename):
    data = np.loadtxt(fname=filename, delimiter=',')

    if data.max(axis=0)[0] == 0 and data.max(axis=0)[20] == 20:
        print 'Suspicious looking maxima!'
    elif data.min(axis=0).sum() == 0:
        print 'Minima add up to zero!'
    else:
        print 'Seems OK!'

Notice that rather than jumbling this code together in one giant for loop, we can now read and reuse both ideas separately. We can reproduce the previous analysis with a much simpler for loop:

for f in filenames[:3]:
    print f
    analyze(f)
    detect_problems(f)

By giving our functions human-readable names, we can more easily read and understand what is happening in the for loop. Even better, if at some later date we want to use either of those pieces of code again, we can do so in a single line.

Testing and Documenting

Once we start putting things in functions so that we can re-use them, we need to start testing that those functions are working correctly. To see how to do this, let's write a function to center a dataset around a particular value:

def center(data, desired):
    return (data - data.mean()) + desired

We could test this on our actual data, but since we don't know what the values ought to be, it will be hard to tell if the result was correct. Instead, let's use NumPy to create a matrix of 0's and then center that around 3:

z = numpy.zeros((2,2))
print center(z, 3)

[[ 3.  3.]
 [ 3.  3.]]

That looks right, so let's try center on our real data:

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')
print center(data, 0)

[[-6.14875 -6.14875 -5.14875 ..., -3.14875 -6.14875 -6.14875]
 [-6.14875 -5.14875 -4.14875 ..., -5.14875 -6.14875 -5.14875]
 [-6.14875 -5.14875 -5.14875 ..., -4.14875 -5.14875 -5.14875]
 ...,
 [-6.14875 -5.14875 -5.14875 ..., -5.14875 -5.14875 -5.14875]
 [-6.14875 -6.14875 -6.14875 ..., -6.14875 -4.14875 -6.14875]
 [-6.14875 -6.14875 -5.14875 ..., -5.14875 -5.14875 -6.14875]]

It's hard to tell from the default output whether the result is correct, but there are a few simple tests that will reassure us:

print 'original min, mean, and max are:', data.min(), data.mean(), data.max()
centered = center(data, 0)
print 'min, mean, and max of centered data are:', centered.min(), centered.mean(), centered.max()

original min, mean, and max are: 0.0 6.14875 20.0
min, mean, and max of centered data are: -6.14875 -3.49054118942e-15 13.85125

That seems almost right: the original mean was about 6.1, so the lower bound is now about -6.1. The mean of the centered data isn't quite zero (we'll explore why not in the challenges), but it's pretty close. We can even go further and check that the standard deviation hasn't changed:

print 'std dev before and after:', data.std(), centered.std()

std dev before and after: 4.61383319712 4.61383319712

Those values look the same, but we probably wouldn't notice if they were different in the sixth decimal place. Let's do this instead:

print 'difference in standard deviations before and after:', data.std() - centered.std()

difference in standard deviations before and after: -3.5527136788e-15

Again, the difference is very small. It's still possible that our function is wrong, but it seems unlikely enough that we should probably get back to doing our analysis. We have one more task first, though: we should write some documentation for our function to remind ourselves later what it's for and how to use it.
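Rather than eyeballing tiny differences like these, NumPy's allclose function reports whether two values (or arrays) are equal within a tolerance, by default rtol=1e-05 and atol=1e-08. The sketch below uses a small made-up array in place of inflammation-01.csv so that it runs without the data files.

```python
import numpy

def center(data, desired):
    return (data - data.mean()) + desired

# Made-up stand-in for the inflammation data
data = numpy.arange(12.0).reshape((3, 4))
centered = center(data, 0)

print(centered.mean())                             # zero, or extremely close to it
print(numpy.allclose(centered.mean(), 0.0))        # True: equal within the tolerance
print(numpy.allclose(data.std(), centered.std()))  # True: centering kept the spread
```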

The usual way to put documentation in software is to add comments like this:

# center(data, desired): return a new array containing the original data centered around the desired value.
def center(data, desired):
    return (data - data.mean()) + desired

There's a better way, though. If the first thing in a function is a string that isn't assigned to a variable, that string is attached to the function as its documentation:

def center(data, desired):
    '''Return a new array containing the original data centered around the desired value.'''
    return (data - data.mean()) + desired

This is better because we can now ask Python's built-in help system to show us the documentation for the function:

help(center)

Help on function center in module __main__:

center(data, desired)
    Return a new array containing the original data centered around the desired value.

A string like this is called a docstring. We don't need to use triple quotes when we write one, but if we do, we can break the string across multiple lines:

def center(data, desired):
    '''Return a new array containing the original data centered around the desired value.
    Example: center([1, 2, 3], 0) => [-1, 0, 1]'''
    return (data - data.mean()) + desired

help(center)

Help on function center in module __main__:

center(data, desired)
    Return a new array containing the original data centered around the desired value.
    Example: center([1, 2, 3], 0) => [-1, 0, 1]

Defining Defaults

We have passed parameters to functions in two ways: directly, as in type(data), and by name, as in numpy.loadtxt(fname='something.csv', delimiter=','). In fact, we can pass the filename to loadtxt without the fname=:

numpy.loadtxt('inflammation-01.csv', delimiter=',')

array([[ 0.,  0.,  1., ...,  3.,  0.,  0.],
       [ 0.,  1.,  2., ...,  1.,  0.,  1.],
       [ 0.,  1.,  1., ...,  2.,  1.,  1.],
       ...,
       [ 0.,  1.,  1., ...,  1.,  1.,  1.],
       [ 0.,  0.,  0., ...,  0.,  2.,  0.],
       [ 0.,  0.,  1., ...,  1.,  1.,  0.]])

but we still need to say delimiter=:

numpy.loadtxt('inflammation-01.csv', ',')

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-26-e3bc6cf4fd6a> in <module>()

----> 1 numpy.loadtxt('inflammation-01.csv', ',')

/Users/gwilson/anaconda/lib/python2.7/site-packages/numpy/lib/npyio.pyc in loadtxt(fname, dtype, comments, delimiter, converters, skiprows, usecols, unpack, ndmin)

775 try:

776 # Make sure we're dealing with a proper dtype

--> 777 dtype = np.dtype(dtype)

778 defconv = _getconv(dtype)

779

TypeError: data type "," not understood

To understand what's going on, and make our own functions easier to use, let's re-define our center function like this:

def center(data, desired=0.0):
    '''Return a new array containing the original data centered around the desired value (0 by default).
    Example: center([1, 2, 3], 0) => [-1, 0, 1]'''
    return (data - data.mean()) + desired

The key change is that the second parameter is now written desired=0.0 instead of just desired. If we call the function with two arguments, it works as it did before:

test_data = numpy.zeros((2, 2))
print center(test_data, 3)

[[ 3.  3.]
 [ 3.  3.]]

But we can also now call it with just one parameter, in which case desired is automatically assigned the default value of 0.0:

more_data = 5 + numpy.zeros((2, 2))
print 'data before centering:'
print more_data
print 'centered data:'
print center(more_data)

data before centering:
[[ 5.  5.]
 [ 5.  5.]]
centered data:
[[ 0.  0.]
 [ 0.  0.]]

This is handy: if we usually want a function to work one way, but occasionally need it to do something else, we can allow people to pass a parameter when they need to but provide a default to make the normal case easier. The example below shows how Python matches values to parameters:

def display(a=1, b=2, c=3):
    print 'a:', a, 'b:', b, 'c:', c

print 'no parameters:'
display()
print 'one parameter:'
display(55)
print 'two parameters:'
display(55, 66)

no parameters:
a: 1 b: 2 c: 3
one parameter:
a: 55 b: 2 c: 3
two parameters:
a: 55 b: 66 c: 3

As this example shows, parameters are matched up from left to right, and any that haven't been given a value explicitly get their default value. We can override this behavior by naming the value as we pass it in:

print 'only setting the value of c'
display(c=77)

only setting the value of c
a: 1 b: 2 c: 77

With that in hand, let's look at the help for numpy.loadtxt:

help(numpy.loadtxt)

Help on function loadtxt in module numpy.lib.npyio:

loadtxt(fname, dtype=<type 'float'>, comments='#', delimiter=None, converters=None, skiprows=0, usecols=None, unpack=False, ndmin=0)

Load data from a text file.

Each row in the text file must have the same number of values.

Parameters

----------

fname : file or str

File, filename, or generator to read. If the filename extension is

``.gz`` or ``.bz2``, the file is first decompressed. Note that

generators should return byte strings for Python 3k.

dtype : data-type, optional

Data-type of the resulting array; default: float. If this is a

record data-type, the resulting array will be 1-dimensional, and

each row will be interpreted as an element of the array. In this

case, the number of columns used must match the number of fields in

the data-type.

comments : str, optional

The character used to indicate the start of a comment;

default: '#'.

delimiter : str, optional

The string used to separate values. By default, this is any

whitespace.

converters : dict, optional

A dictionary mapping column number to a function that will convert

that column to a float. E.g., if column 0 is a date string:

``converters = {0: datestr2num}``. Converters can also be used to

provide a default value for missing data (but see also `genfromtxt`):

``converters = {3: lambda s: float(s.strip() or 0)}``. Default: None.

skiprows : int, optional

Skip the first `skiprows` lines; default: 0.

usecols : sequence, optional

Which columns to read, with 0 being the first. For example,

``usecols = (1,4,5)`` will extract the 2nd, 5th and 6th columns.

The default, None, results in all columns being read.

unpack : bool, optional

If True, the returned array is transposed, so that arguments may be

unpacked using ``x, y, z = loadtxt(...)``. When used with a record

data-type, arrays are returned for each field. Default is False.

ndmin : int, optional

The returned array will have at least `ndmin` dimensions.

Otherwise mono-dimensional axes will be squeezed.

Legal values: 0 (default), 1 or 2.

.. versionadded:: 1.6.0

Returns

-------

out : ndarray

Data read from the text file.

See Also

--------

load, fromstring, fromregex

genfromtxt : Load data with missing values handled as specified.

scipy.io.loadmat : reads MATLAB data files

Notes

-----

This function aims to be a fast reader for simply formatted files. The

`genfromtxt` function provides more sophisticated handling of, e.g.,

lines with missing values.

Examples

--------

>>> from StringIO import StringIO # StringIO behaves like a file object

>>> c = StringIO("0 1\n2 3")

>>> np.loadtxt(c)

array([[ 0., 1.],

[ 2., 3.]])

>>> d = StringIO("M 21 72\nF 35 58")

>>> np.loadtxt(d, dtype={'names': ('gender', 'age', 'weight'),

... 'formats': ('S1', 'i4', 'f4')})

array([('M', 21, 72.0), ('F', 35, 58.0)],

dtype=[('gender', '|S1'), ('age', '<i4'), ('weight', '<f4')])

>>> c = StringIO("1,0,2\n3,0,4")

>>> x, y = np.loadtxt(c, delimiter=',', usecols=(0, 2), unpack=True)

>>> x

array([ 1., 3.])

>>> y

array([ 2., 4.])

There's a lot of information here, but the most important part is the first couple of lines:

loadtxt(fname, dtype=<type 'float'>, comments='#', delimiter=None, converters=None, skiprows=0, usecols=None, unpack=False, ndmin=0)

This tells us that loadtxt has one parameter called fname that doesn't have a default value, and eight others that do. If we call the function like this:

numpy.loadtxt('inflammation-01.csv', ',')

then the filename is assigned to fname (which is what we want), but the delimiter string ',' is assigned to dtype rather than delimiter, because dtype is the second parameter in the list. However, ',' isn't a known dtype, so our code produced an error message when we tried to run it. When we call loadtxt we don't have to provide fname= for the filename because it's the first item in the list, but if we want the ',' to be assigned to the variable delimiter, we do have to provide delimiter=, since delimiter is not the second parameter in the list.
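To see the matching rule without reading a real file, here is a hypothetical stand-in, fake_loadtxt, that copies only the first four parameters of loadtxt's signature and simply reports which value ended up in which parameter. It is not part of NumPy and does not load anything.

```python
def fake_loadtxt(fname, dtype=float, comments='#', delimiter=None):
    '''Hypothetical stand-in: report how the arguments were matched.'''
    return {'fname': fname, 'dtype': dtype,
            'comments': comments, 'delimiter': delimiter}

positional = fake_loadtxt('inflammation-01.csv', ',')         # ',' lands in dtype
by_name = fake_loadtxt('inflammation-01.csv', delimiter=',')  # ',' lands in delimiter

print('dtype=%r delimiter=%r' % (positional['dtype'], positional['delimiter']))
# dtype=',' delimiter=None
```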

Combining strings

"Adding" two strings produces their concatenation: 'a' + 'b' is 'ab'. Write a function called fence that takes two parameters called original and wrapper and returns a new string that has the wrapper character at the beginning and end of the original. A call to your function should look like this:

print fence('name', '*')

*name*

Selecting characters from strings

If the variable s refers to a string, then s[0] is the string's first character and s[-1] is its last. Write a function called outer that returns a string made up of just the first and last characters of its input. A call to your function should look like this:

print outer('helium')

hm

Rescaling an array

Write a function rescale that takes an array as input and returns a corresponding array of values scaled to lie in the range 0.0 to 1.0. (Hint: If L and H are the lowest and highest values in the original array, then the replacement for a value v should be (v − L)/(H − L).)

Testing and documenting your function

Run the commands help(numpy.arange) and help(numpy.linspace) to see how to use these functions to generate regularly-spaced values, then use those values to test your rescale function. Once you've successfully tested your function, add a docstring that explains what it does.

Defining defaults

Rewrite the rescale function so that it scales data to lie between 0.0 and 1.0 by default, but will allow the caller to specify lower and upper bounds if they want. Compare your implementation to your neighbor's: do the two functions always behave the same way?

Variables inside and outside functions

What does the following piece of code display when run - and why?

f = 0
k = 0

def f2k(f):
    k = ((f-32)*(5.0/9.0)) + 273.15
    return k

f2k(8)
f2k(41)
f2k(32)

print k

Errors and Exceptions

Learning Objectives

- To be able to read a traceback, and determine the following relevant pieces of information:

  - The file, function, and line number on which the error occurred

  - The type of the error

  - The error message

- To be able to describe the types of situations in which the following errors occur:

  - SyntaxError and IndentationError

  - NameError

  - IndexError

  - IOError

Every programmer encounters errors, both those who are just beginning, and those who have been programming for years. Encountering errors and exceptions can be very frustrating at times, and can make coding feel like a hopeless endeavour. However, understanding what the different types of errors are and when you are likely to encounter them can help a lot. Once you know why you get certain types of errors, they become much easier to fix.

Errors in Python have a very specific form, called a traceback. Let's examine one:

import errors_01

errors_01.favorite_ice_cream()

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

<ipython-input-1-9d0462a5b07c> in <module>()

1 import errors_01

----> 2 errors_01.favorite_ice_cream()

/Users/jhamrick/project/swc/novice/python/errors_01.pyc in favorite_ice_cream()

5 "strawberry"

6 ]

----> 7 print ice_creams[3]

IndexError: list index out of range

This particular traceback has two levels. You can determine the number of levels by looking for the number of arrows on the left hand side. In this case:

- The first shows code from the cell above, with an arrow pointing to Line 2 (which is favorite_ice_cream()).

- The second shows some code in another function (favorite_ice_cream, located in the file errors_01.py), with an arrow pointing to Line 7 (which is print ice_creams[3]).

The last level is the actual place where the error occurred. The other level(s) show what function the program executed to get to the next level down. So, in this case, the program first performed a function call to the function favorite_ice_cream. Inside this function, the program encountered an error on Line 7, when it tried to run the code print ice_creams[3].

So what error did the program actually encounter? In the last line of the traceback, Python helpfully tells us the category or type of error (in this case, it is an IndexError) and a more detailed error message (in this case, it says "list index out of range").
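The type and message on the last line of a traceback are also available to the program itself. As a sketch, the code below triggers the same kind of error by hand. The contents of ice_creams are an assumption, since errors_01.py isn't shown here (the traceback only tells us the list has fewer than four items), and the code uses try/except, which this lesson doesn't cover, purely to capture the error instead of stopping.

```python
ice_creams = ['chocolate', 'vanilla', 'strawberry']  # assumed contents: indices 0, 1, 2

try:
    ice_creams[3]
except IndexError as error:
    error_type = type(error).__name__  # the category on the traceback's last line
    error_message = str(error)         # the detailed message after the colon

print(error_type + ': ' + error_message)  # IndexError: list index out of range
```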

If you encounter an error and don't know what it means, it is still important to read the traceback closely. That way, if you fix the error, but encounter a new one, you can tell that the error changed. Additionally, sometimes just knowing where the error occurred is enough to fix it, even if you don't entirely understand the message.

If you do encounter an error you don't recognize, try looking at the official documentation on errors. However, note that you may not always be able to find the error there, as it is possible to create custom errors. In that case, hopefully the custom error message is informative enough to help you figure out what went wrong.

Syntax Errors

When you forget a colon at the end of a line, accidentally add one space too many when indenting under an if statement, or forget a parenthesis, you will encounter a syntax error. This means that Python couldn't figure out how to read your program. This is similar to forgetting punctuation in English:

this text is difficult to read there is no punctuation there is also no capitalization why is this hard because you have to figure out where each sentence ends you also have to figure out where each sentence begins to some extent it might be ambiguous if there should be a sentence break or not

People can typically figure out what is meant by text with no punctuation, but people are much smarter than computers. If Python doesn't know how to read the program, it will just give up and inform you with an error. For example:

def some_function()
    msg = "hello, world!"
    print msg
     return msg

  File "<ipython-input-3-6bb841ea1423>", line 1
    def some_function()
                       ^
SyntaxError: invalid syntax

Here, Python tells us that there is a SyntaxError on line 1, and even puts a little arrow in the place where there is an issue. In this case the problem is that the function definition is missing a colon at the end.

Actually, the function above has two issues with syntax. If we fix the problem with the colon, we see that there is also an IndentationError, which means that the lines in the function definition do not all have the same indentation:

def some_function():
    msg = "hello, world!"
    print msg
     return msg

  File "<ipython-input-4-ae290e7659cb>", line 4
    return msg
    ^
IndentationError: unexpected indent

Both SyntaxError and IndentationError indicate a problem with the syntax of your program, but an IndentationError is more specific: it always means that there is a problem with how your code is indented.
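Python checks syntax before running anything, and IndentationError is in fact a more specific kind of SyntaxError. The sketch below uses the built-in compile() function to parse the two broken versions of some_function without running them; error_name is a hypothetical helper written for this example, and the source strings are reconstructions of the code above (with return standing in for the print statement so that they parse the same way in Python 2.7 and Python 3).

```python
missing_colon = 'def some_function()\n    return 1\n'
bad_indent = 'def some_function():\n    msg = 1\n     return msg\n'

def error_name(source):
    '''Hypothetical helper: name of the error raised while parsing source, or None.'''
    try:
        compile(source, '<example>', 'exec')  # parse only; nothing is executed
    except SyntaxError as error:              # also catches IndentationError
        return type(error).__name__
    return None

print(error_name(missing_colon))                  # SyntaxError
print(error_name(bad_indent))                     # IndentationError
print(issubclass(IndentationError, SyntaxError))  # True
```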

Variable Name Errors

Another very common type of error is called a NameError, and occurs when you try to use a variable that does not exist. For example:

print a

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-7-9d7b17ad5387> in <module>()

----> 1 print a

NameError: name 'a' is not defined

Variable name errors come with some of the most informative error messages, which are usually of the form "name 'the_variable_name' is not defined".

Why does this error message occur? That's a harder question to answer, because it depends on what your code is supposed to do. However, there are a few very common reasons why you might have an undefined variable. The first is that you meant to use a string, but forgot to put quotes around it:

print hello

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-8-9553ee03b645> in <module>()

----> 1 print hello

NameError: name 'hello' is not defined

The second is that you just forgot to create the variable before using it. In the following example, count should have been defined (e.g., with count = 0) before the for loop:

for number in range(10):
    count = count + number
print "The count is: " + str(count)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-9-dd6a12d7ca5c> in <module>()

1 for number in range(10):

----> 2 count = count + number

3 print "The count is: " + str(count)

NameError: name 'count' is not defined

Finally, the third possibility is that you made a typo when you were writing your code. Let's say we fixed the error above by adding the line Count = 0 before the for loop. Frustratingly, this actually does not fix the error. Remember that variables are case-sensitive, so the variable count is different from Count. We still get the same error, because we still have not defined count:

Count = 0
for number in range(10):
    count = count + number
print "The count is: " + str(count)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-10-d77d40059aea> in <module>()

1 Count = 0

2 for number in range(10):

----> 3 count = count + number

4 print "The count is: " + str(count)

NameError: name 'count' is not defined

Item Errors

Next up are errors having to do with containers (like lists and dictionaries) and the items within them. If you try to access an item in a list or a dictionary that does not exist, then you will get an error. This makes sense: if you asked someone what day they would like to get coffee, and they answered "caturday", you might be a bit annoyed. Python gets similarly annoyed if you try to ask it for an item that doesn't exist:

letters = ['a', 'b', 'c']
print "Letter #1 is " + letters[0]
print "Letter #2 is " + letters[1]
print "Letter #3 is " + letters[2]
print "Letter #4 is " + letters[3]

Letter #1 is a
Letter #2 is b
Letter #3 is c

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

<ipython-input-11-d817f55b7d6c> in <module>()

3 print "Letter #2 is " + letters[1]

4 print "Letter #3 is " + letters[2]

----> 5 print "Letter #4 is " + letters[3]

IndexError: list index out of rangeHere, Python is telling us that there is an IndexError in our code, meaning we tried to access a list index that did not exist.
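Dictionaries fail in a similar way. Because they are indexed by key rather than by position, asking for a key that isn't there raises a KeyError instead of an IndexError. The sketch below uses a made-up favorite_days dictionary and two illustrative helper names, lookup_day and lookup_day_with_get, none of which come from the lesson. It shows two common ways to guard a lookup. The first tests membership with `in`. The second calls the dictionary's standard `get` method with a default value.

```python
# Hypothetical example data: maps a person's name to a day of the week.
favorite_days = {'alice': 'monday', 'bob': 'friday'}


def lookup_day(name):
    """Return the favorite day for name, or 'unknown' if name is not a key."""
    # Checking membership first means favorite_days[name] cannot raise KeyError.
    if name in favorite_days:
        return favorite_days[name]
    return 'unknown'


def lookup_day_with_get(name):
    """Same behavior, using dict.get with a default instead of an explicit check."""
    return favorite_days.get(name, 'unknown')


try:
    favorite_days['carol']  # 'carol' is not a key, so this raises KeyError
except KeyError as err:
    missing_key = err.args[0]  # the key that could not be found
```

Which style to use is mostly a matter of taste. `get` is shorter, and the explicit `in` test makes it easier to do something other than return a default.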

File Errors

The last type of error we'll cover today is the one associated with reading and writing files: IOError. The "IO" in IOError stands for "input/output", which is just a fancy way of saying "reading/writing". If you try to read a file that does not exist, you will receive an IOError telling you so. This is the most common reason for receiving an IOError. If the error message says "No such file or directory", then you know you have just tried to access a file that does not exist:

file_handle = open('myfile.txt', 'r')

---------------------------------------------------------------------------
IOError                                   Traceback (most recent call last)
<ipython-input-14-f6e1ac4aee96> in <module>()
----> 1 file_handle = open('myfile.txt', 'r')

IOError: [Errno 2] No such file or directory: 'myfile.txt'

One reason for receiving this error is that you specified an incorrect path to the file. For example, suppose I am currently in a folder called myproject, and I have a file at myproject/writing/myfile.txt. If I try to open just myfile.txt, this will fail. The correct path would be writing/myfile.txt. It is also possible (as with NameError) that you just made a typo.
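One way to avoid the wrong-path problem is to build the path from its parts and check that the file exists before opening it. Here is a minimal sketch using the standard library's `os.path.join` and `os.path.exists`. The helper name open_for_reading is hypothetical and not part of any library. The folder and file names passed to it would be ones like writing and myfile.txt from the example above.

```python
import os


def open_for_reading(folder, filename):
    """Open folder/filename for reading, failing with a clear message if it is missing.

    Hypothetical helper for illustration only.
    """
    # os.path.join inserts the correct separator for the current operating system.
    path = os.path.join(folder, filename)
    if not os.path.exists(path):
        # Report the full path we tried, which makes a wrong folder easy to spot.
        raise IOError('No such file: ' + path)
    return open(path, 'r')
```

Printing or reporting the full path that was attempted is often the quickest way to see that the program is looking in the wrong folder. (In Python 3, IOError is another name for OSError, and a missing file raises the subclass FileNotFoundError.)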

Another issue could be that you used the "write" flag instead of the "read" flag. Python will not give you an error if you try to open a file for writing when the file does not exist; it simply creates the file. If the file does exist, opening it with 'w' erases its contents. However, if you meant to open a file for reading but accidentally opened it for writing, and then try to read from it, you will get an error telling you that the file was not opened for reading:

file_handle = open('myfile.txt', 'w')
file_handle.read()

---------------------------------------------------------------------------
IOError                                   Traceback (most recent call last)
<ipython-input-15-b846479bc61f> in <module>()
      1 file_handle = open('myfile.txt', 'w')
----> 2 file_handle.read()

IOError: File not open for reading

Reading Error Messages

Read the traceback below, and identify the following pieces of information about it:

- How many levels does the traceback have?

- What is the file name where the error occurred?

- What is the function name where the error occurred?

- On which line number in this function did the error occur?

- What is the type of error?

- What is the error message?

import errors_02
errors_02.print_friday_message()

---------------------------------------------------------------------------
KeyError                                  Traceback (most recent call last)
<ipython-input-2-e4c4cbafeeb5> in <module>()
      1 import errors_02
----> 2 errors_02.print_friday_message()

/Users/jhamrick/project/swc/novice/python/errors_02.py in print_friday_message()
     13
     14 def print_friday_message():
---> 15     print_message("Friday")

/Users/jhamrick/project/swc/novice/python/errors_02.py in print_message(day)
      9         "sunday": "Aw, the weekend is almost over."
     10     }
---> 11     print messages[day]
     12
     13

KeyError: 'Friday'

Identifying Syntax Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. Is it a SyntaxError or an IndentationError?

- Fix the error.

- Repeat the second and third steps until you have fixed all the errors.

def another_function
print "Syntax errors are annoying."
print "But at least python tells us about them!"
print "So they are usually not too hard to fix."

Identifying Variable Name Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. What type of NameError do you think this is? In other words, is it a string with no quotes, a misspelled variable, or a variable that should have been defined but was not?

- Fix the error.

- Repeat the second and third steps until you have fixed all the errors.

for number in range(10):
    # use a if the number is a multiple of 3, otherwise use b
    if (Number % 3) == 0:
        message = message + a
    else:
        message = message + "b"
print message

Identifying Item Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. What type of error is it?

- Fix the error.

seasons = ['Spring', 'Summer', 'Fall', 'Winter']
print 'My favorite season is ', seasons[4]

Defensive Programming

Learning Objectives

- Explain what an assertion is.

- Add assertions that check the program's state is correct.

- Correctly add precondition and postcondition assertions to functions.

- Explain what test-driven development is, and use it when creating new functions.

- Explain why variables should be initialized using actual data values rather than arbitrary constants.

Our previous lessons have introduced the basic tools of programming: variables and lists, file I/O, loops, conditionals, and functions. What they haven't done is show us how to tell whether a program is getting the right answer, and how to tell if it's still getting the right answer as we make changes to it.

To achieve that, we need to:

- Write programs that check their own operation.

- Write and run tests for widely-used functions.

- Make sure we know what "correct" actually means.

The good news is, doing these things will speed up our programming, not slow it down. As in real carpentry --- the kind done with lumber --- the time saved by measuring carefully before cutting a piece of wood is much greater than the time that measuring takes.

Assertions

The first step toward getting the right answers from our programs is to assume that mistakes will happen and to guard against them. This is called defensive programming, and the most common way to do it is to add assertions to our code so that it checks itself as it runs. An assertion is simply a statement that something must be true at a certain point in a program. When Python sees one, it evaluates the assertion's condition. If the condition is true, Python does nothing. If it is false, Python raises an AssertionError, which halts the program immediately and displays the error message if one is provided. For example, this piece of code halts as soon as the loop encounters a value that isn't positive:

numbers = [1.5, 2.3, 0.7, -0.001, 4.4]
total = 0.0
for n in numbers:
    assert n > 0.0, 'Data should only contain positive values'
    total += n
print 'total is:', total

---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-19-33d87ea29ae4> in <module>()
      2 total = 0.0
      3 for n in numbers:
----> 4     assert n > 0.0, 'Data should only contain positive values'
      5     total += n
      6 print 'total is:', total

AssertionError: Data should only contain positive values

Programs like the Firefox browser are full of assertions: 10-20% of the code they contain is there to check that the other 80-90% is working correctly. Broadly speaking, assertions fall into three categories:

A precondition is something that must be true at the start of a function in order for it to work correctly.

A postcondition is something that the function guarantees is true when it finishes.

An invariant is something that is always true at a particular point inside a piece of code.
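As a quick illustration of all three categories in one place, here is a small sketch of our own. The function name largest is just for illustration and does not come from any library. The function finds the biggest item in a list, and each assertion is labeled with its category.

```python
def largest(values):
    """Return the largest item in a non-empty list of numbers."""
    # Precondition: the list must not be empty, or values[0] below would fail.
    assert len(values) > 0, 'Cannot find the largest item of an empty list'
    # Initialize from the data itself rather than an arbitrary constant like 0.
    best = values[0]
    for i in range(1, len(values)):
        if values[i] > best:
            best = values[i]
        # Invariant: at this point, best is at least as big as values[0] .. values[i].
        assert all(best >= v for v in values[:i + 1]), 'best is smaller than an item already seen'
    # Postconditions: the result is at least as big as every item, and is one of them.
    assert all(best >= v for v in values), 'Result is smaller than some item'
    assert best in values, 'Result is not an element of the input'
    return best
```

Starting best at values[0] is what lets the function handle lists of all-negative numbers. If we had started it at 0 instead, the postcondition `best in values` would catch the mistake on a list like [-7, -2, -9].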

For example, suppose we are representing rectangles using a tuple of four coordinates (x0, y0, x1, y1), representing the lower left and upper right corners of the rectangle. In order to do some calculations, we need to normalize the rectangle so that the lower left corner is at the origin and the longest side is 1.0 units long. This function does that, but checks that its input is correctly formatted and that its result makes sense:

def normalize_rectangle(rect):
    '''Normalizes a rectangle so that it is at the origin and 1.0 units long on its longest axis.'''
    assert len(rect) == 4, 'Rectangles must contain 4 coordinates'
    x0, y0, x1, y1 = rect
    assert x0 < x1, 'Invalid X coordinates'
    assert y0 < y1, 'Invalid Y coordinates'

    dx = x1 - x0
    dy = y1 - y0
    if dx > dy:
        scaled = float(dx) / dy
        upper_x, upper_y = 1.0, scaled
    else:
        scaled = float(dx) / dy
        upper_x, upper_y = scaled, 1.0

    assert 0 < upper_x <= 1.0, 'Calculated upper X coordinate invalid'
    assert 0 < upper_y <= 1.0, 'Calculated upper Y coordinate invalid'

    return (0, 0, upper_x, upper_y)

The preconditions on lines 3, 5, and 6 catch invalid inputs:

print normalize_rectangle( (0.0, 1.0, 2.0) ) # missing the fourth coordinate

---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-21-3a97b1dcab70> in <module>()
----> 1 print normalize_rectangle( (0.0, 1.0, 2.0) ) # missing the fourth coordinate

<ipython-input-20-408dc39f3915> in normalize_rectangle(rect)
      1 def normalize_rectangle(rect):
      2     '''Normalizes a rectangle so that it is at the origin and 1.0 units long on its longest axis.'''
----> 3     assert len(rect) == 4, 'Rectangles must contain 4 coordinates'
      4     x0, y0, x1, y1 = rect
      5     assert x0 < x1, 'Invalid X coordinates'

AssertionError: Rectangles must contain 4 coordinates

print normalize_rectangle( (4.0, 2.0, 1.0, 5.0) ) # X axis inverted

---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-22-f05ae7878a45> in <module>()
----> 1 print normalize_rectangle( (4.0, 2.0, 1.0, 5.0) ) # X axis inverted

<ipython-input-20-408dc39f3915> in normalize_rectangle(rect)
      3     assert len(rect) == 4, 'Rectangles must contain 4 coordinates'
      4     x0, y0, x1, y1 = rect
----> 5     assert x0 < x1, 'Invalid X coordinates'
      6     assert y0 < y1, 'Invalid Y coordinates'
      7

AssertionError: Invalid X coordinates

The postconditions help us catch bugs by telling us when our calculations cannot have been correct. For example, if we normalize a rectangle that is taller than it is wide, everything seems OK:

print normalize_rectangle( (0.0, 0.0, 1.0, 5.0) )

(0, 0, 0.2, 1.0)

But if we normalize one that's wider than it is tall, the assertion is triggered:

print normalize_rectangle( (0.0, 0.0, 5.0, 1.0) )

---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-24-5f0ef7954aeb> in <module>()
----> 1 print normalize_rectangle( (0.0, 0.0, 5.0, 1.0) )

<ipython-input-20-408dc39f3915> in normalize_rectangle(rect)
     16
     17     assert 0 < upper_x <= 1.0, 'Calculated upper X coordinate invalid'
---> 18     assert 0 < upper_y <= 1.0, 'Calculated upper Y coordinate invalid'
     19
     20     return (0, 0, upper_x, upper_y)

AssertionError: Calculated upper Y coordinate invalid

Re-reading our function, we realize that line 11 should divide dy by dx rather than dx by dy. (You can display line numbers by typing Ctrl-M, then L.) If we had left out the assertion at the end of the function, we would have created and returned something that had the shape of a valid answer but wasn't one. Detecting and debugging that would almost certainly have taken more time in the long run than writing the assertion.
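Applying the fix described above gives the version sketched below. The only change is the division in the branch for rectangles that are wider than they are tall, which now divides dy by dx. With that change, the scaled value on the shorter axis is always at most 1.0. The comments are ours; the assertions are unchanged from the original function.

```python
def normalize_rectangle(rect):
    '''Normalizes a rectangle so that it is at the origin and 1.0 units long on its longest axis.'''
    # Preconditions: four coordinates, with the second corner above and to the right of the first.
    assert len(rect) == 4, 'Rectangles must contain 4 coordinates'
    x0, y0, x1, y1 = rect
    assert x0 < x1, 'Invalid X coordinates'
    assert y0 < y1, 'Invalid Y coordinates'

    dx = x1 - x0
    dy = y1 - y0
    if dx > dy:
        # Wider than tall: X becomes 1.0, and Y shrinks by the same factor.
        scaled = float(dy) / dx
        upper_x, upper_y = 1.0, scaled
    else:
        # Taller than (or as tall as) wide: Y becomes 1.0.
        scaled = float(dx) / dy
        upper_x, upper_y = scaled, 1.0

    # Postconditions: both upper coordinates must lie in the range (0, 1].
    assert 0 < upper_x <= 1.0, 'Calculated upper X coordinate invalid'
    assert 0 < upper_y <= 1.0, 'Calculated upper Y coordinate invalid'

    return (0, 0, upper_x, upper_y)
```

With this version, the wide rectangle (0.0, 0.0, 5.0, 1.0) normalizes to (0, 0, 1.0, 0.2) instead of tripping the postcondition. Python skips `assert` statements entirely when it is started with the -O (optimize) flag. Checks like these are therefore best used to catch mistakes in our own code, not as the only validation of data supplied by users.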